- February 18, 2025

- Posted by: Goutham Ramesh

- Categories: Application Development, Artificial Intelligence

Introduction

Overview

The landscape of application development has undergone a seismic shift with the advent of AI technologies, particularly with the introduction of ChatGPT by OpenAI. ChatGPT, built on the GPT (Generative Pre-trained Transformer) family of models, took the world by storm, showcasing the remarkable capabilities of conversational AI and making it accessible to the developer community.

Spring AI, the subject of this blog, brings these AI capabilities into the Spring ecosystem, allowing developers to incorporate sophisticated AI functionality into their applications with little effort.

Purpose

The integration of OpenAI’s ChatGPT with Spring AI isn’t just about enhancing application intelligence; it’s about staying at the forefront of technological innovation. As AI continues to evolve, it’s becoming imperative for developers to master these tools to build more dynamic, responsive, and intelligent applications.

This blog aims to guide developers through setting up a chat client within a Spring AI environment, utilizing OpenAI’s ChatGPT to automate interactions and enrich user engagement. By combining the two, developers can turn their applications into more intuitive and efficient AI-powered platforms.

What is Spring AI?

Spring AI is a Java library that provides a simple, easy-to-use interface for interacting with LLMs. It offers higher-level abstractions over various providers such as OpenAI, Azure OpenAI, Hugging Face, Google Vertex AI, Ollama, Amazon Bedrock, DeepSeek, etc.

In short, Spring AI addresses the fundamental challenge of AI integration: connecting your enterprise data and APIs with AI models. It also provides abstractions for output formatting, prompts, etc.

Prerequisite

In order to use OpenAI, we first need to create an OpenAI Platform account and an API key. (This costs a minimum of $10, which can last for months; a very small amount to pay for knowledge enhancement.)

- Go to OpenAI Platform and create an account.

- In the Dashboard, click on API Keys in the left navigation menu and create a new API key. (Please keep this API key secure; we will use it later in the blog.)

Spring AI in action with OpenAI (Chat Client)

Create Spring AI Project

Let’s create a new Spring Boot project using Spring Initializr. (Let’s call this project “SpringAIRnd” )

- Go to Spring Initializr

- Select Web, and OpenAI starters

Update the maven file (pom.xml) as shown below

<?xml version="1.0" encoding="UTF-8"?>

<project xmlns="http://maven.apache.org/POM/4.0.0"

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 https://maven.apache.org/xsd/maven-4.0.0.xsd">

<modelVersion>4.0.0</modelVersion>

<parent>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-parent</artifactId>

<version>3.4.2</version>

<relativePath /> <!-- lookup parent from repository -->

</parent>

<groupId>com.gouthamramesh</groupId>

<artifactId>SpringAIRnd</artifactId>

<version>1</version>

<name>SpringAIRnd</name>

<description>Rnd for Spring AI </description>

<url />

<licenses>

<license />

</licenses>

<developers>

<developer />

</developers>

<scm>

<connection />

<developerConnection />

<tag />

<url />

</scm>

<properties>

<java.version>22</java.version>

<spring-ai.version>1.0.0-M5</spring-ai.version>

</properties>

<dependencies>

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-web</artifactId>

</dependency>

<dependency>

<groupId>org.springframework.ai</groupId>

<artifactId>spring-ai-openai-spring-boot-starter</artifactId>

</dependency>

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-test</artifactId>

<scope>test</scope>

</dependency>

<dependency>

<groupId>org.apache.commons</groupId>

<artifactId>commons-text</artifactId>

<version>1.10.0</version>

</dependency>

</dependencies>

<dependencyManagement>

<dependencies>

<dependency>

<groupId>org.springframework.ai</groupId>

<artifactId>spring-ai-bom</artifactId>

<version>${spring-ai.version}</version>

<type>pom</type>

<scope>import</scope>

</dependency>

</dependencies>

</dependencyManagement>

<build>

<plugins>

<plugin>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-maven-plugin</artifactId>

</plugin>

</plugins>

</build>

</project>

POM Description

Parent Configuration

- spring-boot-starter-parent: Specifies that this project inherits default configurations from Spring Boot’s parent POM. The version specified is 3.4.2, ensuring the project uses this specific release of Spring Boot, which helps manage dependency versions and configuration settings automatically.

Project Metadata

- groupId: com.gouthamramesh – The identifier for the organization or group that created the project.

- artifactId: SpringAIRnd – The unique base name of the primary artifact generated by this project.

- version: 1 – The version of this iteration of the artifact.

- name: SpringAIRnd – A human-readable name for the project.

- description: “Rnd for Spring AI” – Brief description of the project, indicating it’s a research and development project around Spring AI.

Properties

- java.version: 22 – Specifies that the project is using Java 22.

- spring-ai.version: 1.0.0-M5 – Defines the version of Spring AI dependencies, suggesting it’s using a milestone (M5) release.

Dependencies

- spring-boot-starter-web: Includes all the necessary dependencies to create a web-based application including things like Spring MVC, and default configurations for embedded server setup (Tomcat).

- spring-ai-openai-spring-boot-starter: Dependency that would configure and provide services for integrating with OpenAI APIs, simplifying the use of AI technologies within a Spring application.

- spring-boot-starter-test: Provides dependencies necessary for testing Spring Boot applications, such as JUnit, Spring Test, and Mockito.

- commons-text: Apache Commons library that provides additional text operations that are not found in the standard Java library.

Dependency Management

- spring-ai-bom: Uses a “Bill of Materials” (BOM) POM from Spring AI to control the versions of dependencies related to Spring AI across multiple modules or projects without having to specify them in each project.

Build Configuration

- spring-boot-maven-plugin: Configures the Spring Boot Maven plugin for the project, which simplifies the packaging and execution of Spring Boot applications, including functionalities like generating an executable jar.

The above POM file sets the groundwork for a Spring Boot application designed to explore and implement AI functionalities, focusing on integrations with external AI services like OpenAI.

Update application.properties

Add the following lines to application properties

spring.application.name=SpringAIRnd

spring.ai.openai.api-key=<<YOUR OPEN AI KEY>>

spring.ai.openai.chat.model=gpt-4

spring.ai.openai.chat.temperature=0.7

Properties description

- spring.application.name=SpringAIRnd:

Purpose: Sets the name of the Spring Boot application. This name can be used for various purposes, such as identifying the application in logs or within a Spring Cloud context.

Usage: This property is useful for administrative and maintenance purposes, helping to identify instances of the application during deployment and in service logs.

- spring.ai.openai.api-key=<<YOUR OPEN AI KEY>>:

Purpose: Specifies the API key for accessing OpenAI services. The placeholder <<YOUR OPEN AI KEY>> should be replaced with an actual API key obtained from OpenAI.

Usage: This key is essential for authenticating requests made from your application to OpenAI, enabling the use of AI models like GPT-4 for generating text, completing tasks, etc.

- spring.ai.openai.chat.model=gpt-4:

Purpose: Defines which OpenAI model to use for chat or text generation purposes. Here, it specifies gpt-4, indicating that the application should use the GPT-4 model.

Usage: GPT-4 is a powerful language model capable of understanding and generating human-like text based on the input provided. This setting directs the application to leverage GPT-4 for tasks requiring natural language processing.

- spring.ai.openai.chat.temperature=0.7:

Purpose: Sets the temperature for responses from the GPT-4 model. Temperature affects the randomness of the output generated by the model. A higher temperature results in more diverse and less predictable outputs, while a lower temperature makes the model’s responses more deterministic and conservative.

Usage: A temperature of 0.7 provides a balance, allowing for creative yet coherent outputs. This setting is particularly useful when the application needs to generate responses that are neither too standard nor too unpredictable.
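To make the effect of temperature more concrete, here is a small, self-contained sketch of temperature-scaled softmax, the textbook way temperature reshapes a probability distribution over candidate tokens. It is only an illustration: the class name, the method and the logit values are made up for this example, and it is not OpenAI's actual sampling code.

```java
import java.util.Arrays;

public class TemperatureDemo {

    // Softmax with temperature: each score is divided by T before normalising.
    // Lower T sharpens the distribution, higher T flattens it.
    public static double[] softmax(double[] logits, double temperature) {
        if (temperature <= 0) {
            // Simplification for this sketch: only strictly positive temperatures.
            throw new IllegalArgumentException("temperature must be > 0");
        }
        double max = Arrays.stream(logits).max().orElseThrow();
        double[] probs = new double[logits.length];
        double sum = 0.0;
        for (int i = 0; i < logits.length; i++) {
            // Subtracting the max keeps Math.exp numerically stable.
            probs[i] = Math.exp((logits[i] - max) / temperature);
            sum += probs[i];
        }
        for (int i = 0; i < probs.length; i++) {
            probs[i] /= sum;
        }
        return probs;
    }

    public static void main(String[] args) {
        double[] logits = {2.0, 1.0, 0.5}; // hypothetical scores for three candidate tokens
        for (double t : new double[] {0.2, 0.7, 1.5}) {
            System.out.printf("T=%.1f -> %s%n", t, Arrays.toString(softmax(logits, t)));
        }
    }
}
```

Running it shows the most likely token dominating at a low temperature and the probabilities evening out as the temperature rises, which is the behaviour the 0.7 setting tries to balance.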

Summary

These properties are crucial for integrating and customizing the behaviour of OpenAI’s GPT-4 model within a Spring Boot application. They allow you to control how your application interacts with OpenAI, influencing both the operational characteristics (e.g., through the API key) and the behavioural aspects (e.g., model choice and response creativity) of the AI integration.

Create a REST Controller Class to receive chat message.

package com.gouthamramesh.ai.spring.rnd.controller;

import java.util.Map;

import org.springframework.ai.chat.client.ChatClient;

import org.springframework.ai.chat.prompt.Prompt;

import org.springframework.beans.factory.annotation.Autowired;

import org.springframework.web.bind.annotation.GetMapping;

import org.springframework.web.bind.annotation.RequestMapping;

import org.springframework.web.bind.annotation.RequestParam;

import org.springframework.web.bind.annotation.RestController;

@RestController

@RequestMapping("/api/openai")

public class OpenAIController {

private final ChatClient chatClient;

public OpenAIController(ChatClient.Builder chatClientBuilder) {

this.chatClient = chatClientBuilder.build();

}

@GetMapping("/chat")

public Map<String, String> chat(@RequestParam String message) {

String response = chatClient.prompt().user(message).call().content();

return Map.of("question",message, "response", response);

}

}

Controller description

Package

- com.gouthamramesh.ai.spring.rnd.controller:

- This is the namespace under which the controller class resides. It indicates that the class is part of a project developed by “Goutham Ramesh”, focused on AI functionalities within a Spring environment, likely as part of a research and development initiative.

Imports

The controller imports several Java and Spring Framework classes that facilitate its functionality:

- java.util.Map: Used to construct a simple data structure for returning key-value pairs from controller methods.

- org.springframework.ai.chat.client.ChatClient: The Spring AI client interface used to talk to AI chat services.

- org.springframework.web.bind.annotation.*:

- @RestController: Indicates that the class serves RESTful web services and that the methods’ return values should be bound to the web response body.

- @RequestMapping: Declares that all handler methods in this controller will handle requests that are routed under the specified path, in this case, /api/openai.

- @GetMapping: Marks a method to handle GET requests on the specified URL paths.

- @RequestParam: Indicates that a method parameter should be bound to a web request parameter.

Controller Class Definition

- OpenAIController:

- A class marked with the @RestController annotation, indicating it’s a REST controller where handler methods assume @ResponseBody semantics by default (i.e., returning data rather than a view).

- @RequestMapping(“/api/openai”):

- Specifies that the URL path for all request handling methods in this controller will be prefixed with /api/openai.

Constructor and Properties

- private final ChatClient chatClient;:

- A final property that holds an instance of ChatClient. Being final, it must be initialized either via the constructor or at the point of declaration.

- Constructor OpenAIController(ChatClient.Builder chatClientBuilder):

- The constructor takes a ChatClient.Builder as an argument, which is used to build and initialize the chatClient property. This design indicates a dependency on the ChatClient class, which is central to the controller’s functionality, suggesting the use of a builder pattern for creating a ChatClient instance.

Controller Method

- @GetMapping(“/chat”):

- A handler method mapped to handle GET requests on /chat, making it accessible via /api/openai/chat.

- public Map<String, String> chat(@RequestParam String message):

- This method accepts a single string parameter message from the query parameters of the GET request.

- It uses the chatClient to send this message to an AI service, which processes the message and returns a response. The method specifically calls prompt().user(message).call().content(), a chain of method calls to set up the prompt, execute the call, and retrieve the content of the response.

- The response is returned as a Map with two entries: “question” (the original message) and “response” (the AI-generated answer).

Summary

This controller is streamlined to provide an endpoint for interacting with an AI chat service. It handles web requests, processes them using an AI client, and returns the responses in a straightforward, easy-to-consume format. The use of ChatClient implies that the application is integrating with sophisticated AI services, in this case OpenAI.

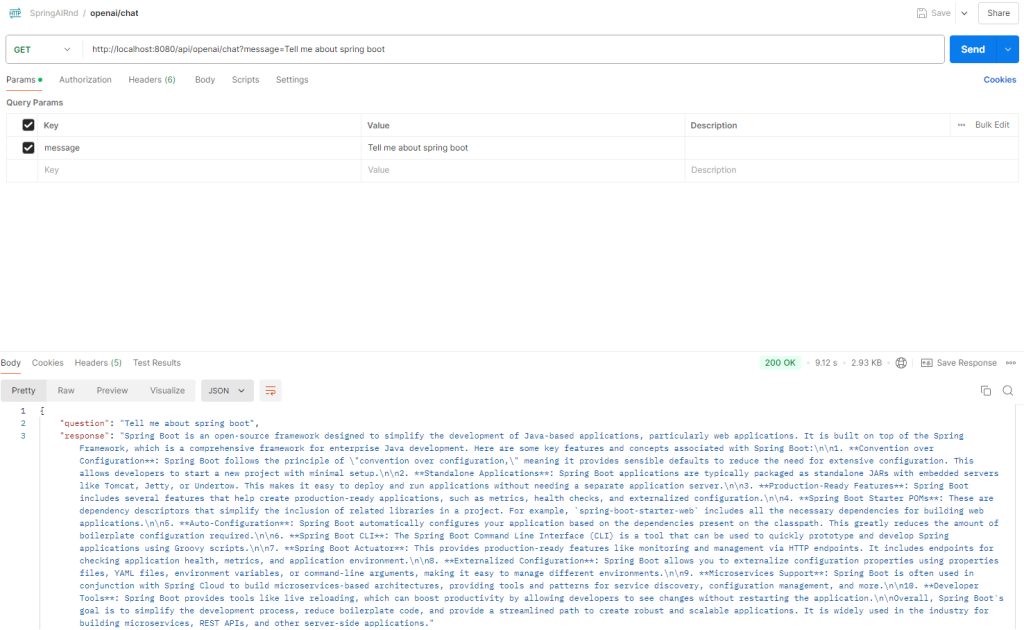

Let us run this

Now we can run the Spring application and test the chat API using Postman or curl.
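If you would rather test from Java than from Postman or curl, the sketch below calls the endpoint with the JDK's built-in HttpClient. It assumes the application is running locally on Spring Boot's default port 8080; the class name ChatEndpointClient and the sample question are made up for this example.

```java
import java.io.IOException;
import java.net.URI;
import java.net.URLEncoder;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.nio.charset.StandardCharsets;

public class ChatEndpointClient {

    // Builds the GET URI for /api/openai/chat, URL-encoding the message parameter.
    public static URI buildChatUri(String baseUrl, String message) {
        String encoded = URLEncoder.encode(message, StandardCharsets.UTF_8);
        return URI.create(baseUrl + "/api/openai/chat?message=" + encoded);
    }

    public static void main(String[] args) throws InterruptedException {
        // Assumption: the app runs locally on the default port.
        URI uri = buildChatUri("http://localhost:8080", "What is Spring AI?");
        HttpRequest request = HttpRequest.newBuilder(uri).GET().build();
        try {
            HttpResponse<String> response = HttpClient.newHttpClient()
                    .send(request, HttpResponse.BodyHandlers.ofString());
            System.out.println(response.statusCode());
            System.out.println(response.body()); // JSON with "question" and "response"
        } catch (IOException e) {
            System.out.println("Request failed (is the application running?): " + e.getMessage());
        }
    }
}
```

Encoding the message matters because questions typically contain spaces and punctuation that are not valid in a raw query string.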

Summary

You now have your first running Spring AI app. Wasn't that easy? Now let us add a bit more sophistication.

Prompt Templates

What is a Prompt?

In the context of generative AI, a prompt is the text input or instruction that you provide to an AI model to generate a response. Here’s a closer look at what a prompt is and how it works:

- Input for AI Models:

A prompt serves as the initial context or question that guides the AI model in producing its output. It can be a simple question, a detailed instruction, or even a piece of text that the model continues or elaborates on.

- Context and Direction:

The quality and structure of the prompt significantly influence the response. By carefully crafting a prompt, you set the context and expectations, helping the AI generate more accurate and relevant responses.

- Dynamic Interaction:

In interactive applications (like chatbots), each user input acts as a prompt, initiating a new cycle of interaction where the AI processes the prompt and generates a corresponding reply.

- Customization:

Prompts can be tailored with specific language, examples, or instructions to steer the AI toward a desired style or outcome. This is particularly useful when you need the AI to perform specialized tasks like summarization, translation, or creative writing.

In essence, a prompt is the essential starting point for any interaction with a generative AI, determining the direction and nature of the AI’s response.

Spring AI Prompt Templates

Prompt templates in Spring AI serve as pre-defined blueprints for constructing the text inputs (or “prompts”) that you send to generative AI models. Here’s what they are and why they’re useful:

- Reusable Structure:

Prompt templates provide a consistent format for your AI requests. By defining a template, you can reuse the same structure across multiple requests, ensuring that your instructions to the AI remain consistent.

- Dynamic Content Insertion:

Templates often include placeholders for variable content. At runtime, you can replace these placeholders with actual data or context-specific information. This approach lets you tailor the prompt to each specific use case while keeping the overall format intact.

- Maintainability and Clarity:

Using templates makes your code cleaner and easier to manage. Instead of hardcoding long or complex prompt strings throughout your code, you centralize the prompt logic in one place. This separation of static and dynamic content can simplify updates and maintenance.

- Standardization Across Interactions:

In applications that interact with generative AI in multiple ways (like chatbots, summarizers, or content generators), prompt templates help standardize how you instruct the AI. This can lead to more predictable and reliable outputs.
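The idea of placeholders being replaced at runtime can be sketched in plain Java. The SimpleTemplate class below is a hypothetical stand-in written for this illustration; it is not Spring AI's PromptTemplate, which has its own rendering engine, but it shows the same substitution principle.

```java
import java.util.Map;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

public class SimpleTemplate {

    // Matches placeholders of the form {name}.
    private static final Pattern PLACEHOLDER = Pattern.compile("\\{(\\w+)\\}");

    // Replaces every {name} in the template with the matching value from the map.
    public static String render(String template, Map<String, ?> values) {
        Matcher matcher = PLACEHOLDER.matcher(template);
        StringBuilder out = new StringBuilder();
        while (matcher.find()) {
            String key = matcher.group(1);
            if (!values.containsKey(key)) {
                // Fail loudly rather than send a half-filled prompt to the model.
                throw new IllegalArgumentException("No value for placeholder: " + key);
            }
            String value = String.valueOf(values.get(key));
            matcher.appendReplacement(out, Matcher.quoteReplacement(value));
        }
        matcher.appendTail(out);
        return out.toString();
    }

    public static void main(String[] args) {
        System.out.println(render("Tell me a joke about {subject}", Map.of("subject", "cats")));
    }
}
```

The static part of the prompt stays in one place while only the map of values changes per request.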

Prompt Templates in Action

Let's see how to use prompt templates. To do that, we will put the template text in a properties file and create a service that returns a Prompt object, which can then be used in a controller.

Prompt.properties

Now let's create a new properties file called “prompt.properties” and add the following line

joke.promt=Tell me a joke about {subject}

PromptService

Let's create a “PromptService” class, which will read the above property and then create a Prompt object

package com.gouthamramesh.ai.spring.rnd.service;

import java.util.Map;

import org.springframework.ai.chat.prompt.Prompt;

import org.springframework.ai.chat.prompt.PromptTemplate;

import org.springframework.context.annotation.PropertySource;

import org.springframework.core.env.Environment;

import org.springframework.stereotype.Service;

/**

*

*/

@Service

@PropertySource("classpath:prompt.properties")

public class PromptService {

private final Environment env;

public PromptService(Environment env) {

this.env = env;

}

public Prompt getPromptTemplate(String promptKey, Map<String, Object> promptParams) {

// get the prompt template string from the properties file using the prompt key

String promptTemplateString = env.getProperty(promptKey);

// create a new prompt template

PromptTemplate promptTemplate = new PromptTemplate(promptTemplateString);

// create a new prompt

Prompt prompt = promptTemplate.create(promptParams);

return prompt;

}

}

This class, PromptService, is part of the service layer in your Spring AI application. Its main purpose is to generate prompts dynamically based on templates stored in a properties file. Here’s a detailed explanation of its components and functionality:

Package and Imports

- Package Declaration:

package com.gouthamramesh.ai.spring.rnd.service;

This places the PromptService class within the service layer of your project.

- Imports:

- java.util.Map: Used for passing parameters to populate the prompt template.

- org.springframework.ai.chat.prompt.Prompt and PromptTemplate: Classes from the Spring AI library that help in creating and managing AI prompts.

- org.springframework.context.annotation.PropertySource: Used to specify an external properties file (prompt.properties) from which prompt templates are loaded.

- org.springframework.core.env.Environment: Provides access to environment variables and properties.

- org.springframework.stereotype.Service: Marks the class as a Spring-managed service component.

Annotations

- @Service: Indicates that this class is a service component in the Spring context. It will be automatically detected during component scanning.

- @PropertySource("classpath:prompt.properties"): Specifies that the prompt.properties file (located in the classpath) should be loaded. This file contains prompt template strings identified by keys.

Constructor

- public PromptService(Environment env):

- The constructor accepts an Environment object, which is automatically injected by Spring.

- The Environment is used to read properties from the loaded prompt.properties file.

Core Method: getPromptTemplate

- Method Signature:

public Prompt getPromptTemplate(String promptKey, Map<String, Object> promptParams)

- Functionality:

- Retrieve Template String: The method first retrieves a prompt template string from the properties file using the provided promptKey.

String promptTemplateString = env.getProperty(promptKey);

- Create a PromptTemplate: It then creates an instance of PromptTemplate using the retrieved string.

PromptTemplate promptTemplate = new PromptTemplate(promptTemplateString);

- Generate a Prompt: Using the promptTemplate, it creates a Prompt object by populating it with the provided parameters (promptParams).

Prompt prompt = promptTemplate.create(promptParams);

- Return the Prompt: Finally, the generated prompt is returned.
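One detail worth keeping in mind with the lookup step: a property lookup returns null when the key does not exist, so a mistyped key only surfaces later as a confusing failure. The sketch below shows a fail-fast lookup using plain java.util.Properties. TemplateLookup, fromString and require are hypothetical names for this illustration; in the real service the same null check could be applied to the value returned by env.getProperty.

```java
import java.io.IOException;
import java.io.StringReader;
import java.io.UncheckedIOException;
import java.util.Properties;

public class TemplateLookup {

    private final Properties props = new Properties();

    // Loads templates from properties-formatted text (stands in for prompt.properties).
    public static TemplateLookup fromString(String propertiesText) {
        TemplateLookup lookup = new TemplateLookup();
        try {
            lookup.props.load(new StringReader(propertiesText));
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return lookup;
    }

    // Returns the template for the key, or fails with a clear message if it is missing.
    public String require(String key) {
        String value = props.getProperty(key);
        if (value == null || value.isBlank()) {
            throw new IllegalStateException("No prompt template configured for key: " + key);
        }
        return value;
    }

    public static void main(String[] args) {
        TemplateLookup lookup = fromString("joke.promt=Tell me a joke about {subject}\n");
        System.out.println(lookup.require("joke.promt"));
    }
}
```

Because the key in this blog is spelled joke.promt, asking for joke.prompt would be rejected immediately with a readable error instead of producing an empty template.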

Controller

Now let's add a method to the controller created earlier. This method uses the prompt service to create a prompt and call OpenAI. (The controller also needs an import for com.gouthamramesh.ai.spring.rnd.service.PromptService.) The updated controller is

@RestController

@RequestMapping("/api/openai")

public class OpenAIController {

private final ChatClient chatClient;

@Autowired

private PromptService promptService;

public OpenAIController(ChatClient.Builder chatClientBuilder) {

this.chatClient = chatClientBuilder.build();

}

@GetMapping("/chat")

public Map<String, String> chat(@RequestParam String message) {

String response = chatClient.prompt().user(message).call().content();

return Map.of("question",message, "response", response);

}

@GetMapping("/joke")

public Map<String, String> joke(@RequestParam String subject) {

Prompt prompt = promptService.getPromptTemplate("joke.promt", Map.of("subject", subject));

String response = chatClient.prompt(prompt).call().content();

return Map.of("subject",subject, "response", response);

}

}

The code above extends the OpenAIController REST controller created earlier with a new field and a new endpoint.

New Fields and Dependency Injection

- @Autowired private PromptService promptService;:

This field is automatically injected by Spring’s dependency injection mechanism. PromptService is responsible for creating dynamic prompts (from a properties file), allowing for customized AI interactions.

Endpoint: /joke

- @GetMapping("/joke"):

This method is mapped to HTTP GET requests at the URL /api/openai/joke.

- Method Signature:

public Map<String, String> joke(@RequestParam String subject)

- @RequestParam String subject: The method expects a query parameter named subject in the HTTP request.

- Functionality:

- Create a Custom Prompt: The method uses promptService.getPromptTemplate("joke.promt", Map.of("subject", subject)); to retrieve a pre-defined prompt template (identified by the key "joke.promt") from the properties file and populate it with the subject. This dynamic prompt is then used for the AI request.

- Invoke Chat Client with Custom Prompt: The populated prompt is passed to the chat client with chatClient.prompt(prompt).call().content(); to generate a joke based on the given subject.

- Return a Map: It returns a map with the "subject" and the AI-generated "response", which is then sent back as JSON.

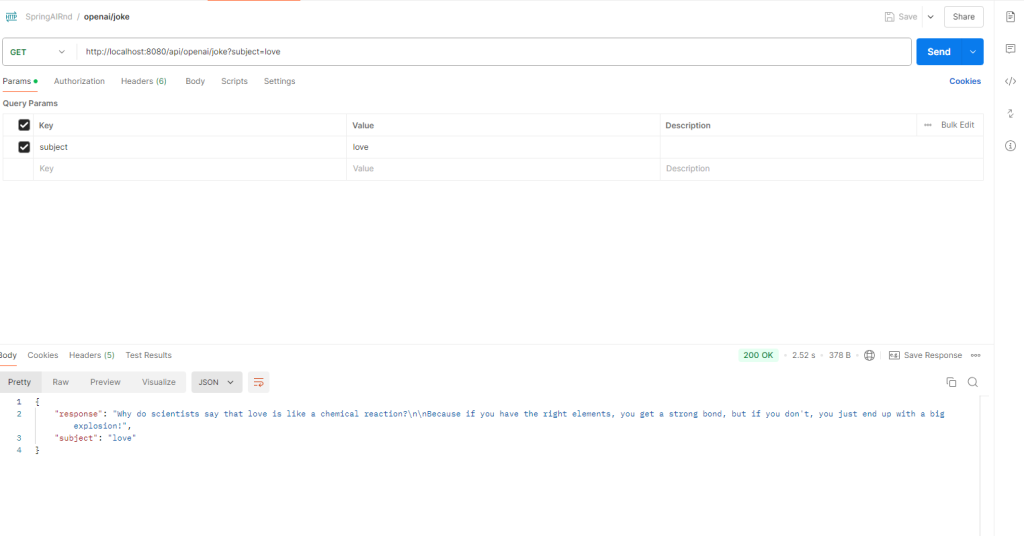

Let us run this

Now we can run the Spring application and test the joke API using Postman or curl.

Prompt templates and specifying roles

We can use prompt templates to specify the roles of the user and the LLM through system messages and user messages.

prompt.properties

Now let us create two more properties in “prompt.properties”, one for the system message and one for the user message

spring.system.prompt=you are a spring framework expert , if the {subject} is not a spring framework related question, say you cant help

spring.user.prompt= Tell me about {subject}

Have a close look at the system prompt: we are setting the role of the LLM, telling it that it is a Spring expert and that, if the subject is anything other than Spring, it should say it can't help.

This kind of context setting is very powerful and can be used to eliminate unwanted responses from the LLM.

PromptService

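Let's add a method in “PromptService” to process the system message and the user message separately. It uses StringSubstitutor from Apache Commons Text (the commons-text dependency in the POM), the UserMessage and SystemMessage classes from org.springframework.ai.chat.messages, and java.util.List, so those imports need to be added.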

public Prompt getPromptTemplate(String systemMesageKey, String promptKey, Map<String, Object> params) {

// get the prompt template strings from the properties file using the keys

String promptTemplateString = env.getProperty(promptKey);

String systemPromptTemplateString = env.getProperty(systemMesageKey);

StringSubstitutor substitutor = new StringSubstitutor(params, "{", "}");

String systemMessageString = substitutor.replace(systemPromptTemplateString);

String userMessageString = substitutor.replace(promptTemplateString);

UserMessage userMessage = new UserMessage(userMessageString);

SystemMessage systemMessage = new SystemMessage(systemMessageString);

Prompt prompt = new Prompt(List.of(systemMessage, userMessage));

return prompt;

}

Explanation

This method constructs a composite prompt by dynamically merging two templates (a system prompt template and a user prompt template) with the provided parameters. Here’s a detailed breakdown of its functionality:

- Retrieve Template Strings:

- User Prompt Template: This line fetches the user prompt template from the environment (typically from a properties file) using the provided promptKey.

String promptTemplateString = env.getProperty(promptKey);

- System Prompt Template: Similarly, this line retrieves the system prompt template using the systemMesageKey.

String systemPromptTemplateString = env.getProperty(systemMesageKey);

- Substitute Parameters into Templates:

- A StringSubstitutor is created with the given params map and the delimiters { and }:

StringSubstitutor substitutor = new StringSubstitutor(params, "{", "}");

- System Message String: The substitutor replaces any placeholders in the system prompt template with the corresponding values from params:

String systemMessageString = substitutor.replace(systemPromptTemplateString);

- User Message String: Similarly, it processes the user prompt template:

String userMessageString = substitutor.replace(promptTemplateString);

- Create Message Objects:

- UserMessage: A UserMessage object is instantiated with the fully substituted user message string:

UserMessage userMessage = new UserMessage(userMessageString);

- SystemMessage: A SystemMessage object is created using the substituted system message string:

SystemMessage systemMessage = new SystemMessage(systemMessageString);

- Combine Messages into a Prompt:

- Finally, both the system and user messages are combined into a single Prompt object:

Prompt prompt = new Prompt(List.of(systemMessage, userMessage));

- This composite prompt is then returned as the output of the method.

Summary

- Purpose:

The method dynamically builds a prompt by fetching two templates, substituting runtime values into those templates, and then combining the resulting messages into a single Prompt object.

- Key Steps:

- Retrieve template strings using keys.

- Substitute parameters into these templates using StringSubstitutor.

- Create UserMessage and SystemMessage objects from the substituted strings.

- Combine these messages into a Prompt that is returned.

This approach allows you to maintain and modify prompt templates externally while dynamically creating rich, context-aware prompts at runtime.
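To see why the system and user messages are kept separate, it helps to picture what a chat-style API receives: an ordered list of messages, each tagged with a role. The sketch below builds such a list by hand in plain Java. The ChatMessagesSketch class and its Message record are hypothetical names for this illustration, and the JSON is hand-assembled only to show the shape; in the application, Spring AI builds the real request from the Prompt.

```java
import java.util.List;
import java.util.stream.Collectors;

public class ChatMessagesSketch {

    // A role-tagged message, mirroring the "role"/"content" pairs of chat APIs.
    public record Message(String role, String content) {}

    // Minimal JSON string escaping, enough for this illustration.
    static String escape(String s) {
        return s.replace("\\", "\\\\").replace("\"", "\\\"").replace("\n", "\\n");
    }

    // Serialises the messages in order: system instructions first, then the user turn.
    public static String toJson(List<Message> messages) {
        return messages.stream()
                .map(m -> "{\"role\":\"" + m.role() + "\",\"content\":\"" + escape(m.content()) + "\"}")
                .collect(Collectors.joining(",", "[", "]"));
    }

    public static void main(String[] args) {
        List<Message> messages = List.of(
                new Message("system", "You are a Spring expert."),
                new Message("user", "Tell me about security"));
        System.out.println(toJson(messages));
    }
}
```

The system entry sets the ground rules once, and the user entry carries the actual question, which is the split that List.of(systemMessage, userMessage) expresses in the service method.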

Controller

Now let us add a method to controller to consume this service

@GetMapping("/springexpert")

public Map<String, String> springexpert(@RequestParam String subject) {

Prompt prompt = promptService.getPromptTemplate("spring.system.prompt", "spring.user.prompt",Map.of("subject", subject));

String response = chatClient.prompt(prompt).call().content();

return Map.of("subject",subject, "response", response);

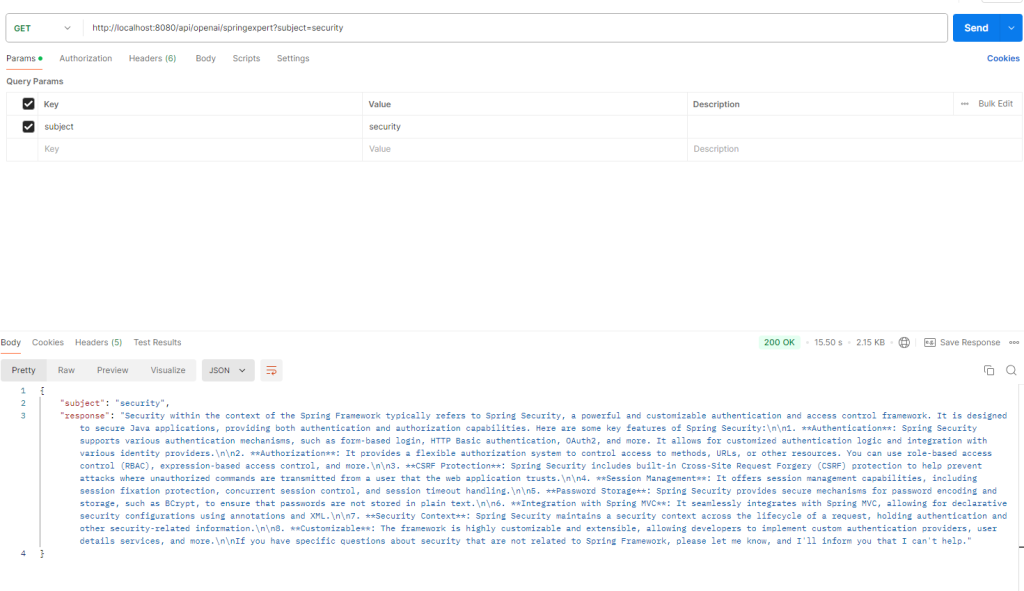

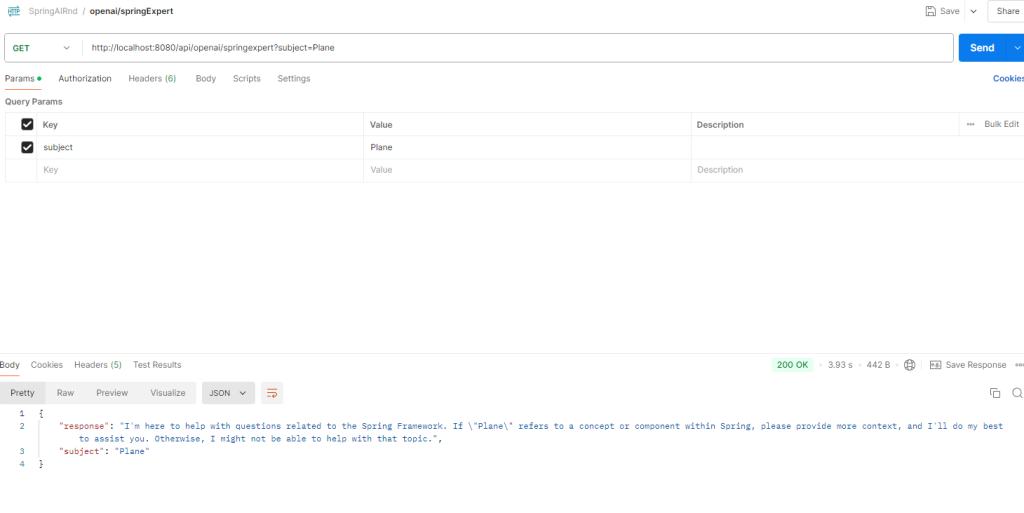

}Lets run this

Let us run by asking about “security”

Now lets ask about something that is not related to spring

You just saw how setting role helps!

OutputConverters

In the previous examples, we get the response from LLMs as Strings. We can use OutputConverters to parse the response and extract the required information in the desired format.

As of now, Spring AI provides the following types of OutputConverters:

BeanOutputConverter

- Purpose: Converts the raw AI response into a Java Bean (i.e., a well-defined Java object).

- Usage:

- When the response from the AI service is structured (for example, in JSON format) and corresponds to a specific Java class, the BeanOutputConverter can be used to automatically map the fields from the response to the properties of your Java Bean.

- This approach allows for type-safe interactions, letting you work with a structured object that represents the data without manual parsing.

- Example:

If you have a TechExpertResponse class with fields like subject and response, the BeanOutputConverter will parse the AI output and populate an instance of this class, making it easier to use in your application logic.
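A bean converter has two jobs: telling the model what shape to answer in, and mapping the answer back onto a class. The first half can be sketched with plain reflection. FormatHintSketch, its formatHint method and the TechExpertAnswer record are hypothetical names for this illustration; the real BeanOutputConverter produces richer format instructions than this one-line hint.

```java
import java.util.Arrays;
import java.util.stream.Collectors;

public class FormatHintSketch {

    // Hypothetical target type, modelled as a record for brevity.
    public record TechExpertAnswer(String subject, String response) {}

    // Derives a plain-text format instruction from the record's components.
    public static String formatHint(Class<? extends Record> type) {
        String fields = Arrays.stream(type.getRecordComponents())
                .map(c -> "\"" + c.getName() + "\": <" + c.getType().getSimpleName() + ">")
                .collect(Collectors.joining(", "));
        return "Respond with a single JSON object of the form {" + fields + "} and no other text.";
    }

    public static void main(String[] args) {
        System.out.println(formatHint(TechExpertAnswer.class));
    }
}
```

Appending a hint like this to the prompt is what makes the model's reply predictable enough to be parsed into an object afterwards.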

MapOutputConverter

- Purpose: Parses the AI response and converts it into a Java Map (typically a Map<String, Object>).

- Usage:

- This converter is useful when the response is semi-structured or when you don’t have a predetermined Java class to map the data to.

- It allows dynamic access to the response data via key-value pairs, which can be particularly useful for responses with varying fields or when you need to quickly inspect the contents without a fixed schema.

- Example:

If the AI response returns different fields based on context, using a MapOutputConverter lets you access these fields using standard map operations, such as get("answer") or iterating over the key set.

ListOutputConverter

- Purpose: Converts the AI response into a List, typically used when the response is an array or collection of items.

- Usage:

- When the expected output from the AI service is a list of items (such as multiple suggestions, responses, or elements), the ListOutputConverter can parse the response into a Java List.

- This is beneficial for scenarios where you need to process or iterate over multiple elements returned by the AI.

- Example:

If your AI service returns a set of recommendations or a series of data points, using the ListOutputConverter will give you a List of strings parsed from a comma-separated response. If each element needs to be a Map or a Bean instead, a BeanOutputConverter configured for a list type is the better fit.

In the following example, let's use BeanOutputConverter!
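The list case is the simplest one to picture. Assuming the model has been instructed to answer with comma-separated values, turning the raw reply into a List is little more than a split and a trim. ListParsingSketch and its parse method are hypothetical names for this sketch, not Spring AI classes.

```java
import java.util.Arrays;
import java.util.List;

public class ListParsingSketch {

    // Splits a comma-separated reply into trimmed, non-empty items.
    public static List<String> parse(String rawResponse) {
        return Arrays.stream(rawResponse.split(","))
                .map(String::trim)
                .filter(item -> !item.isEmpty())
                .toList();
    }

    public static void main(String[] args) {
        System.out.println(parse("Spring Boot, Spring Security ,Spring Data"));
    }
}
```

A library converter adds the matching format instruction to the prompt as well, so that the model's reply and the parser agree on the separator.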

prompt.properties

Add the following template string to prompt.properties

### Spring System Prompt

spring.user.prompt.format = {system} . now tell me about {subject} Respond in JSON format: {format}

Your final “prompt.properties” will look like this

joke.promt=Tell me a joke about {subject}

spring.system.prompt=you are a spring framework expert , if the {subject} is not a spring framework related question, say you cant help

spring.user.prompt= Tell me about {subject}

### Spring System Prompt

spring.user.prompt.format = {system} . now tell me about {subject} Respond in JSON format: {format}

Model Class for Output (TechExpertResponse)

Create a model class for the output (this is the bean that will hold the response)

/**

*

*/

package com.gouthamramesh.ai.spring.rnd.output.model;

/**

*

*/

public class TechExpertResponse {

private String subject;

private String response;

public TechExpertResponse() {

super();

}

public TechExpertResponse(String subject, String response) {

super();

this.subject = subject;

this.response = response;

}

public String getSubject() {

return subject;

}

public void setSubject(String subject) {

this.subject = subject;

}

public String getResponse() {

return response;

}

public void setResponse(String response) {

this.response = response;

}

}

PromptService

Add the following method to “PromptService” and resolve imports

public Prompt getPromptTemplateFormated(String systemMesageKey, String promptKey,
        BeanOutputConverter<TechExpertResponse> outputConverter, String subject) {

    Map<String, Object> params = new HashMap<String, Object>();
    params.put("subject", subject);

    // get the system string from the property file
    String systemPromptTemplateString = env.getProperty(systemMesageKey);
    StringSubstitutor substitutor = new StringSubstitutor(params, "{", "}");
    String systemMessageString = substitutor.replace(systemPromptTemplateString);

    // get the prompt string from the property file
    params.put("system", systemMessageString);
    params.put("format", outputConverter.getFormat());
    String promptTemplateString = env.getProperty(promptKey);
    var userPromptTemplate = new PromptTemplate(promptTemplateString);
    Prompt prompt = userPromptTemplate.create(params);
    return prompt;
}

This method, getPromptTemplateFormated, dynamically constructs a fully formatted prompt by merging two template strings from your properties file with runtime parameters. Here’s a step-by-step explanation of what the method does:

- Parameter Overview:

  - `systemMesageKey`: The key to look up a system-level prompt template from the properties file.

  - `promptKey`: The key to look up the main (user) prompt template from the properties file.

  - `outputConverter`: A `BeanOutputConverter<TechExpertResponse>` instance, which provides a specific output format (via its `getFormat()` method) to be included in the prompt.

  - `subject`: A dynamic value (e.g., a topic or keyword) that will be inserted into the prompt templates.

- Building the Parameters Map:

  - A new `HashMap` called `params` is created to hold all the key-value pairs that will be substituted into the templates.

  - The `subject` is immediately added to this map: `Map<String, Object> params = new HashMap<>(); params.put("subject", subject);`

- Processing the System Message Template:

  - The method retrieves the system-level prompt template string using `env.getProperty(systemMesageKey)`. This string may include placeholders (e.g., `{subject}`) for dynamic content.

  - A `StringSubstitutor` is then instantiated with the `params` map and custom delimiters `{` and `}`. It replaces the placeholders in the system template string with actual values from the map: `String systemPromptTemplateString = env.getProperty(systemMesageKey); StringSubstitutor substitutor = new StringSubstitutor(params, "{", "}"); String systemMessageString = substitutor.replace(systemPromptTemplateString);`

- Updating Parameters for the User Prompt:

  - The formatted system message (now with placeholders replaced) is added back into the `params` map under the key `"system"`.

  - The method then retrieves the format for the output by calling `outputConverter.getFormat()` and adds it to the map under the key `"format"`. This format string tells the model how to structure its response: `params.put("system", systemMessageString); params.put("format", outputConverter.getFormat());`

- Creating the User Prompt:

  - The method retrieves the user prompt template string using the `promptKey` from the properties file.

  - A new `PromptTemplate` object is created using this template string: `String promptTemplateString = env.getProperty(promptKey); var userPromptTemplate = new PromptTemplate(promptTemplateString);`

  - The `create` method of `PromptTemplate` is then called with the updated `params` map. This method replaces any placeholders in the user prompt template with the corresponding values from `params`, resulting in a fully constructed `Prompt` object: `Prompt prompt = userPromptTemplate.create(params);`

- Returning the Final Prompt:

  - The fully formatted `Prompt` object is returned from the method.

Summary

- Dynamic Configuration: The method uses keys to look up template strings from a properties file, allowing you to manage prompt templates externally.

- Parameter Substitution: It leverages `StringSubstitutor` to replace placeholders (e.g., `{subject}`) in the system message template with runtime values.

- Enhanced Prompt Creation: By inserting both the formatted system message and the output format (from `outputConverter`) into the parameters map, the method ensures that the user prompt template is enriched with all the necessary context.

- Final Output: The result is a `Prompt` object that encapsulates all the dynamic information, ready to be used in an AI chat or text generation process.

This method effectively modularizes prompt construction, making it easier to update and manage prompts without hardcoding them in your codebase.
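The two-stage substitution described above can be tried out in isolation. The sketch below uses only the JDK: its `render` helper is a simplified, hypothetical stand-in for both `StringSubstitutor` and `PromptTemplate`, and the format text is a placeholder rather than real converter output. It resolves the system template first, then feeds the result into the user template under the `system` key.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Sketch only: a JDK-only stand-in for the substitution done in getPromptTemplateFormated.
class PromptRenderSketch {

    private static final Pattern PLACEHOLDER = Pattern.compile("\\{(\\w+)\\}");

    // Replaces each {name} with params.get(name); unknown names are left untouched.
    static String render(String template, Map<String, Object> params) {
        Matcher matcher = PLACEHOLDER.matcher(template);
        StringBuilder out = new StringBuilder();
        while (matcher.find()) {
            Object value = params.get(matcher.group(1));
            String replacement = value != null ? value.toString() : matcher.group();
            matcher.appendReplacement(out, Matcher.quoteReplacement(replacement));
        }
        matcher.appendTail(out);
        return out.toString();
    }

    public static void main(String[] args) {
        Map<String, Object> params = new HashMap<>();
        params.put("subject", "Spring Security");

        // Stage 1: resolve the system template.
        String system = render("you are a spring framework expert , if the {subject} is not "
                + "a spring framework related question, say you cant help", params);

        // Stage 2: add the resolved system text and a placeholder format, then resolve the user template.
        params.put("system", system);
        params.put("format", "<format instructions from the converter>");
        String user = render("{system} . now tell me about {subject} Respond in JSON format: {format}", params);

        System.out.println(user);
    }
}
```

Resolving the system message first means the user template only ever sees plain text under `{system}`, so the order of the two steps matters.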

Controller

Add the following endpoint method to the controller

@GetMapping("/structured/springexpert")
public TechExpertResponse springexpertStructuredResponse(@RequestParam String subject) {
    var outputConverter = new BeanOutputConverter<TechExpertResponse>(TechExpertResponse.class);
    Prompt prompt = promptService.getPromptTemplateFormated("spring.system.prompt",
            "spring.user.prompt.format", outputConverter, subject);
    String response = chatClient.prompt(prompt).call().content();
    return outputConverter.convert(response);
}

Explanation

This method is a REST endpoint mapped to /structured/springexpert that processes a GET request and returns a structured TechExpertResponse object. Here’s a step-by-step breakdown of its functionality:

- Mapping and Input Parameter:

  - The `@GetMapping("/structured/springexpert")` annotation maps this method to handle GET requests sent to the `/structured/springexpert` URL.

  - It expects a query parameter named `subject`, which is provided by the client to specify the topic for the technical expert response.

- Output Converter Initialization:

  - A `BeanOutputConverter<TechExpertResponse>` is created. This converter is responsible for converting the raw string response from the AI service into a well-structured `TechExpertResponse` Java object. It is parameterized with the `TechExpertResponse.class` to ensure type-safe conversion.

- Prompt Generation:

  - The method calls `promptService.getPromptTemplateFormated(...)`, passing:

    - `"spring.system.prompt"`: A key to fetch the system prompt template from a properties file.

    - `"spring.user.prompt.format"`: A key to fetch the user prompt template (or format) from a properties file.

    - The `outputConverter`, which is passed to embed any formatting instructions.

    - The `subject` provided in the request, which is inserted into the prompt.

  - This call returns a `Prompt` object that encapsulates a formatted message ready to be sent to the AI.

- Calling the AI Chat Client:

  - The generated `Prompt` is then used with the `chatClient` to call the AI service. The call chain (`chatClient.prompt(prompt).call().content()`) sends the prompt to the AI and retrieves the resulting output as a string.

- Response Conversion and Return:

  - Finally, the method uses the `outputConverter` to convert the raw string response into a `TechExpertResponse` object.

  - The structured `TechExpertResponse` is then returned to the client as the response of the GET request.

Summary

This method demonstrates a structured way to interact with an AI service:

- Input: It receives a subject to query about technical expertise.

- Processing: It dynamically constructs a prompt using pre-defined templates and runtime parameters, calls the AI service with that prompt, and converts the AI’s raw response into a structured Java bean.

- Output: It returns a `TechExpertResponse` object, providing clients with a clear and well-defined response.

By leveraging a BeanOutputConverter, this endpoint ensures that responses are consistently structured and easy to work with, making the integration of AI into your application both robust and maintainable.
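To call the endpoint from code rather than a browser, a request can be built with the JDK's HTTP client classes. This is a rough sketch under two assumptions that the post does not state: the application listens on http://localhost:8080, and the controller has no class-level path prefix. The class and method names are invented for the example.

```java
import java.net.URI;
import java.net.URLEncoder;
import java.net.http.HttpRequest;
import java.nio.charset.StandardCharsets;

// Sketch only: builds (but does not send) a GET request for the structured endpoint.
class SpringExpertRequestSketch {

    // Assumption: local application on port 8080 with no extra path prefix.
    static final String BASE_URL = "http://localhost:8080";

    static HttpRequest buildRequest(String subject) {
        // URL-encode the subject so spaces and special characters are safe in the query string.
        String query = "subject=" + URLEncoder.encode(subject, StandardCharsets.UTF_8);
        return HttpRequest.newBuilder(URI.create(BASE_URL + "/structured/springexpert?" + query))
                .GET()
                .header("Accept", "application/json")
                .build();
    }

    public static void main(String[] args) {
        HttpRequest request = buildRequest("Spring Security");
        System.out.println(request.method() + " " + request.uri());
    }
}
```

With the application running, the request could be sent with `HttpClient.newHttpClient().send(request, HttpResponse.BodyHandlers.ofString())`, and the body should be the JSON form of a TechExpertResponse.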

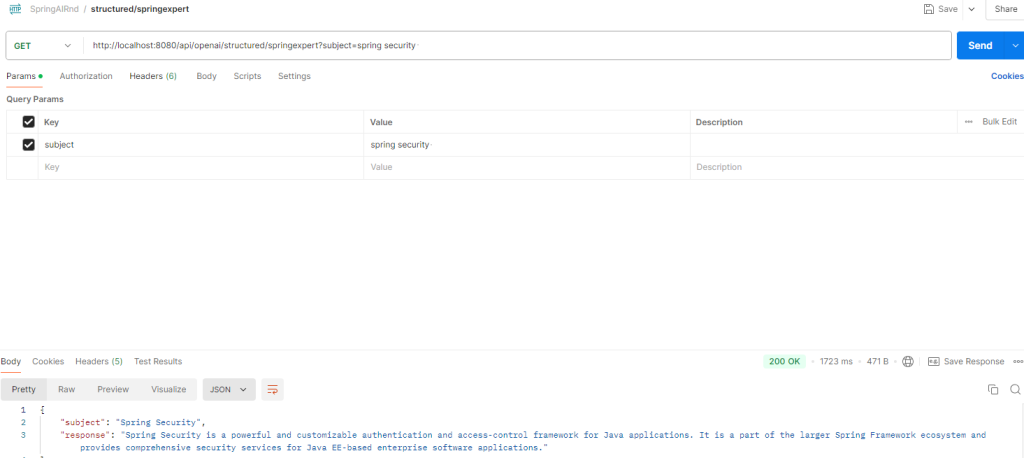

Let's run this

Now let's run the application and ask about Spring Security

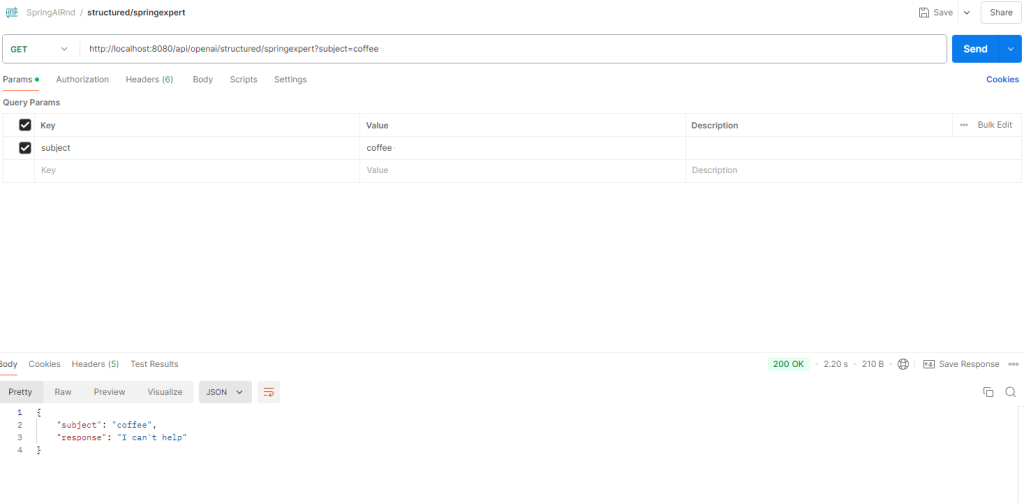

Now let us ask about coffee, which is not related to the Spring Framework

Conclusion

In this blog, we’ve journeyed through the integration of Spring AI with OpenAI, demonstrating how to build dynamic and intelligent applications using a modern Spring Boot framework. We’ve seen how to configure your project with essential dependencies, manage prompt templates via property files, and even leverage AI to generate responses tailored to your needs—like jokes based on a subject.

Notably, we used AI to explain the code in a way that simplifies complex concepts and makes advanced functionality accessible. Rather than running away from AI, we encourage you to embrace it as a powerful tool that can elevate your development process. By integrating AI into your applications, you open up a world of innovative possibilities where human creativity meets machine efficiency.

Embrace AI, experiment with it, and let it be a partner in your coding journey, just as it has been in crafting this blog. (Yes, I used AI to generate explanations of the code!)